7.9.2020, 10:00 CEST:

Alexander Ako Khajetoorians, Radboud University

What can we “learn” from atoms?

In machine learning, energy-based models are rooted in concepts common to magnetism, such as the Ising model. Within these models, plasticity, learning, and ultimately pattern recognition can be linked to the dynamics of coupled spin ensembles. While this behavior is commercially emulated in software, there is a strong push to implement these concepts directly and autonomously in solid-state materials. To date, hybrid approaches, which often exploit the serendipitous electric, magnetic, or optical response of materials, emulate machine learning functionality only with the help of external computers. Yet there is still no clear understanding of how to create machine learning functionality from fundamental physical concepts in materials, such as hysteresis, glassiness, or spin dynamics.

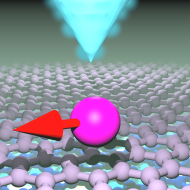

Studied with scanning tunneling microscopy, magnetic atoms on surfaces have become a model playground for understanding and designing magnetic order. However, these model systems have historically been probed in limits that are not usable for machine learning functionality. In this talk, I will illustrate new model platforms that realize machine learning functionality directly in the dynamics of coupled spin ensembles. I will first review the concept of energy-based neural networks and how they are linked to multi-modal landscapes and the physics of spin glasses. I will then highlight a new example based on the recent discovery of orbital memory: an atomic-scale ensemble that mimics the properties of a Boltzmann machine. I will illustrate the creation of atomic-scale neurons and synapses, as well as new learning concepts based on the separation of time scales and self-adaptive behavior. I will also discuss recent cutting-edge developments that enable magnetic characterization in new extreme limits, and how this platform may be applied toward autonomous adaptation and quantum machine learning.